ᕕʕ •ᴥ•ʔ୨ Shank Space

[PROJ] Ramukaka

Aug 06, 2023

Really Advanced Most Useful Kollaborative Assistant Kollaboratively Assisting

Background

- It is the year 2012, Avengers is out and you are completely impressed with J.A.R.V.I.S. The amount of work you could get done with such a system beside you as a Second Brain is fascinating. You wonder what it would take to build it.

- It is the year 2015, Avengers: Age of Ultron is out and you are completely impressed with J.A.R.V.I.S. Tony Stark drops the following quip:

  “Started out, J.A.R.V.I.S. was just a natural language UI. Now he runs the Iron Legion. He runs more of the business than anyone besides Pepper.”

- It is the year 2022, ChatGPT is out and you are completely impressed with LLMs.

There was an idea…

Imagine an assistant on your device. Which you don’t have to request to do tasks, but it just does.

Without prompting. Without a wake word.

Always working for you, always in the background. Like the best of butlers. And when you need something, you just have to “glance” ;) at it. And it is there.

Vision (V.I.S.I.O.N?)

An immersive holographic UI with information floating around you in a dynamic graph of importance, with the most relevant and useful information bubbling up and the lower priority ones sinking and vanishing away.

We are getting there with the Apple Vision Pro, but not in a one-day hack. We are limited to a primitive screen for now. So, we have a dedicated screen, let’s say.

The actual idea, for sure this time

An always active Spotlight Search essentially. Imagine you have a second display always displaying a bunch of widgets and active context. This secondary display is connected to your primary device, say kept just beside the primary display. So the widgets are literally half a head turn away. They have the standard weather, calendar, time, etc. widgets to start with.

Now, Ramukaka is always active, always listening. The first version will be “listening” to all your key presses. A key-logger, yes. And sending all the click stream data to the internal processor of Ramukaka.

Which is on-device. Or near-device at least. Not going away into the internet.

The internal processor is where the magic happens. An on-device LLM decodes the key presses to build a context of what you are working on right now. And it is able to power some integrations like:

- You are in terminal and start typing `kubectl` and it shows recently used commands, you start typing `docker` and it shows helpful autocompletes (which you can copy over from the window with a key combo, say `CMD/Ctrl + Alt + C`)

- You are chatting with someone and type in Slack $450, the conversion to your local currency is there for you on the screen already.

- You are talking about planning a trip to Wayanad, and the weather report, places to stay, stuff to do starts coming up on the second screen for the dates you chose before you even went to the search engine to input the query.

The idea boils down to removing the hops of a wake-word or a query input, because if you have been operating a device for at least a while, it should be fairly easy to build context on what you want to search for by looking at your prior interactions.
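Before any LLM sees the stream, the raw key presses have to be folded back into text. Here is a minimal sketch of that step, assuming the key events arrive as a plain list of key names. The names `backspace`, `space` and `enter`, and the function `keys_to_text`, are made up for illustration and not tied to any capture library.

```python
def keys_to_text(keys, max_chars=200):
    """Fold a stream of key names into the text the user most likely typed.

    Assumption: single characters are literal keys; "backspace", "space"
    and "enter" are hypothetical names for the matching special keys.
    """
    buffer = []
    for key in keys:
        if key == "backspace":
            if buffer:
                buffer.pop()          # undo the last typed character
        elif key == "space":
            buffer.append(" ")
        elif key == "enter":
            buffer.append("\n")
        elif len(key) == 1:
            buffer.append(key)        # ordinary printable character
        # Any other key (shift, ctrl, arrows, ...) is ignored in this sketch.
    # Keep only the most recent characters as the working context.
    return "".join(buffer)[-max_chars:]


keys = list("kubectk") + ["backspace", "l", "space"] + list("get")
print(keys_to_text(keys))
```

The trailing window (`max_chars`) is one simple way to keep the context small enough to hand to a small on-device model; the right size is a guess.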

Implementation Bullets

- At the minimum we will need a small enough LLM to run on the device

  - Primary task being to parse the raw click stream to actionable contexts

  - Also to maybe answer some general questions, working in parallel with the next point

- Some instant answers for typical use cases that can bypass the LLM core for answers

  - DuckDuckGo Instant Answers style. Which work pretty well with regex matching

- API support for fetching and combining data. Especially for early run widgets on display

  - Standard APIs for weather, time, etc.

  - Dedicated APIs for fetching information from the Calendar, Contacts, Phone, etc.

- Dynamic scoring logic

  - Which combines the following attributes of a content piece: importance, relevance, lifetime, repeatability

  - Constantly updating the scores, hence the visual representation, for that sweet holographic AI look

- A graph UI for keeping all the information in view.

  - And you can tap in to zoom into specifics of the information (or pinch…)

- Dedicated hardware with screen

  - A Raspberry Pi with a display?

  - Do we need a dedicated device? Can it be a program that is running in the background on the laptop, and you have a second screen attached pointing to a view of the program?
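The regex-matched instant answers mentioned above could look something like this for the Slack `$450` case. This is a rough sketch only: the function name `currency_instant_answer`, the target currency and the hard-coded rate `USD_TO_INR` are assumptions for illustration; a real widget would fetch the rate from a currency API.

```python
import re

# Hypothetical fixed rate for illustration; not a real quote.
USD_TO_INR = 83.0

# Matches amounts like $450, $1,200 or $19.99.
DOLLAR_AMOUNT = re.compile(r"\$(\d+(?:,\d{3})*(?:\.\d+)?)")


def currency_instant_answer(text, rate=USD_TO_INR, target="INR"):
    """Return a conversion line for the last dollar amount in text, or None."""
    matches = list(DOLLAR_AMOUNT.finditer(text))
    if not matches:
        return None                     # nothing to answer, fall through to the LLM
    amount = float(matches[-1].group(1).replace(",", ""))
    return f"${amount:,.2f} = {amount * rate:,.2f} {target}"


print(currency_instant_answer("sure, I can pay $450 for it"))
print(currency_instant_answer("no money mentioned here"))
```

Returning `None` when nothing matches keeps the matcher cheap to chain: each instant answer gets a look at the context first, and only unmatched context needs the slower LLM path.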

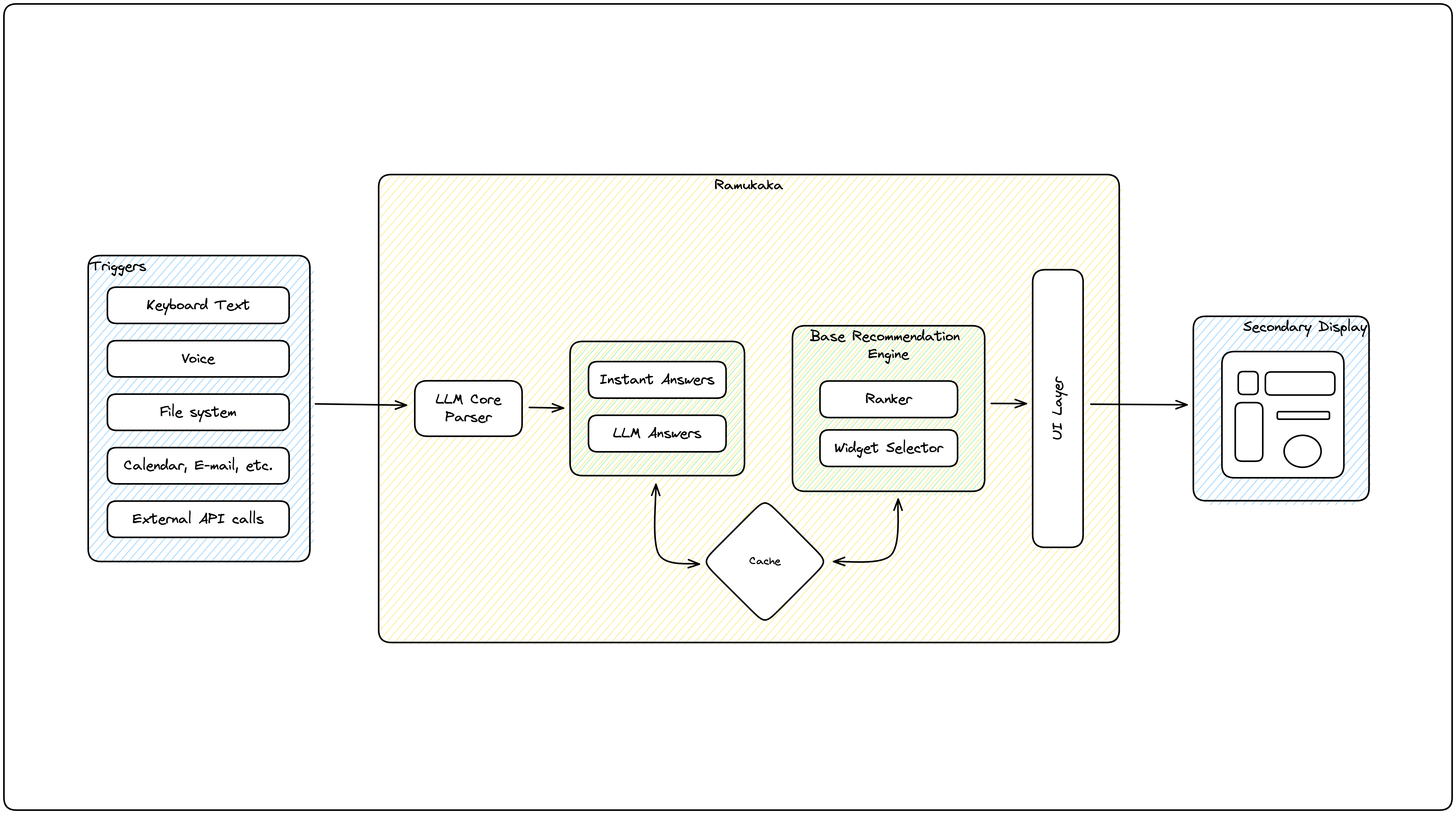

Architecture

Getting to a Minimum Viable Product

We (the royal version) will use Streamlit to build the UI. Because we do not have the necessary frontend experience to build a slick moving-graph UI. What we do have experience in is backend systems, so we will stick to Python. And concentrate on a crucial part of the system: a dynamic scoring logic for content pieces.

Later, we will work on integrating with an LLM for parsing the input keyboard stream. Need to figure out the appropriate prompts and such.

The Decay Score

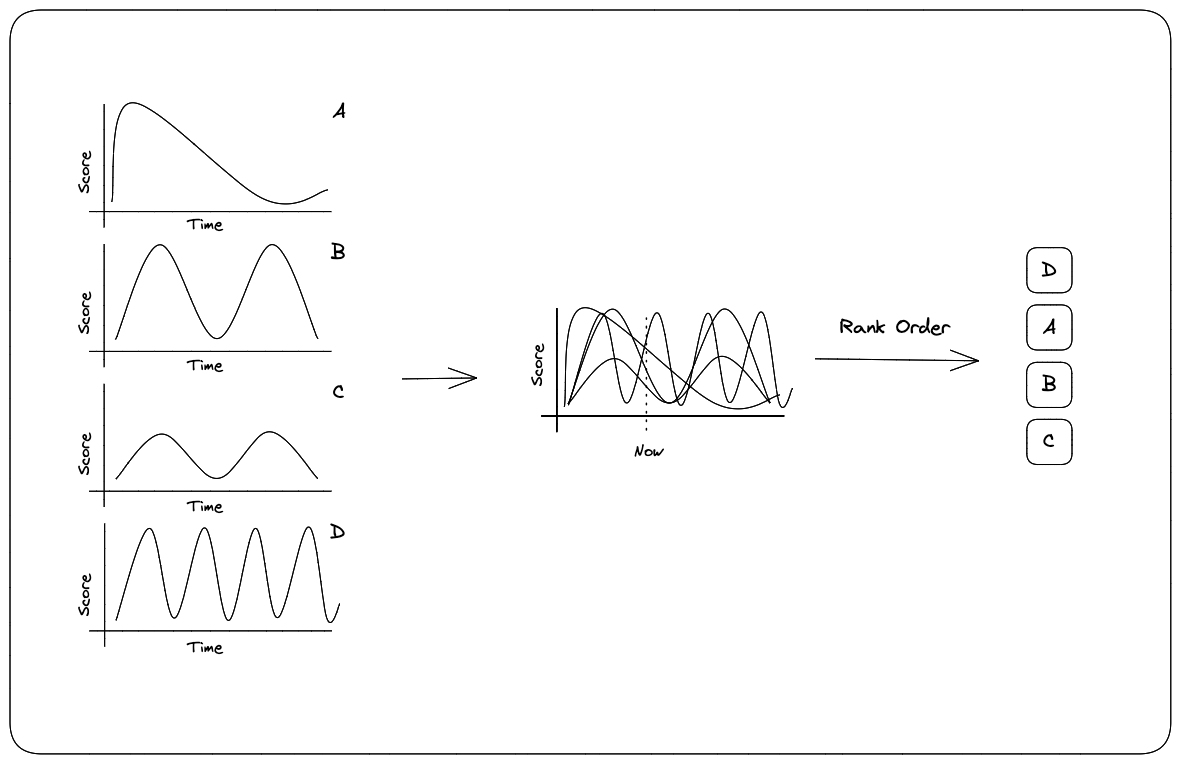

The scoring of the items needs to be dynamic, in the sense that we don’t want to go back to the recommendation engine every second to fetch a new set of scores for all the items. The score defines how “big” an item will be in the moving-graph UI, essentially the weight of the node. And hence it needs to change smoothly over time.

Hence, the recommendation engine is not only going to predict a score for all the items but also a set of decay parameters for each item. These decay parameters are used to build a decay curve on the time axis.

The decay curves might look like so for 4 content pieces:

When you overlay the curves, at any given point in time we get a rank order of the items. As time progresses, the ranking changes, as the curves move relative to each other.

Some items can have high periodicity, some may have high priority but only for a limited time, some are lower priority background items best suited for fallback. All of these can be encoded with a score and a set of decay parameters to construct the decay curve.
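To make the idea concrete, here is a minimal sketch of one possible decay curve. The post does not fix a curve shape, so exponential decay with a half-life is an assumption, as are the names `Item`, `half_life`, `period` and `rank` and the example numbers.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Item:
    name: str
    score: float                    # score predicted at t = 0
    half_life: float                # seconds until the score halves; math.inf means no decay
    period: Optional[float] = None  # if set, the curve restarts every `period` seconds

    def score_at(self, t: float) -> float:
        # Periodic items measure age from the start of the current cycle.
        age = t % self.period if self.period else t
        return self.score * 0.5 ** (age / self.half_life)


def rank(items, t):
    """Names of the items, highest score first, at time t (seconds)."""
    return [item.name for item in sorted(items, key=lambda i: i.score_at(t), reverse=True)]


items = [
    Item("meeting reminder", score=10, half_life=300),     # high priority, limited time
    Item("currency conversion", score=6, half_life=60),    # useful briefly
    Item("weather", score=3, half_life=math.inf),          # background fallback
]

for t in (0, 120, 900):
    print(t, rank(items, t))
```

With these made-up numbers the meeting reminder leads at first, the currency conversion drops below the weather within two minutes, and after fifteen minutes the weather is back on top. The UI only has to evaluate `score_at` locally, without calling the recommendation engine again.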

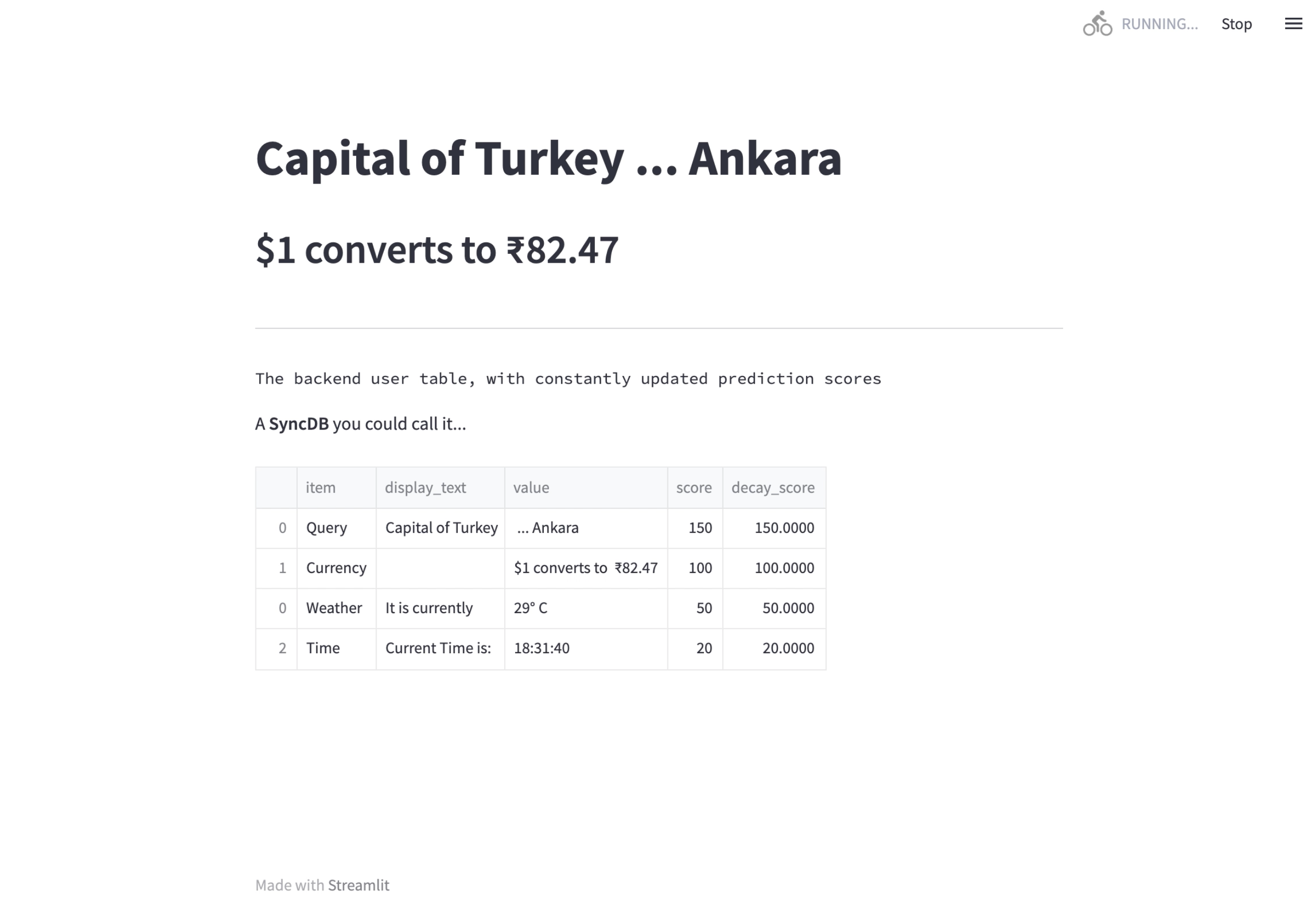

A screen-shot of Ramukaka

Above the horizontal line is the actual display. The font size is mimicking the importance of information. The scene here is, we have Ramukaka running and are chatting about going to Turkey. So Ramukaka has surfaced what it thinks is relevant - the capital of Turkey.
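One way to get the font size to follow the score is a simple linear mapping. This is a sketch under assumptions: the pixel range and the helper names `font_size_px` and `render_items` are invented here. The resulting HTML string could then be handed to Streamlit with `st.markdown(html_string, unsafe_allow_html=True)`.

```python
import html


def font_size_px(score, min_score, max_score, min_px=12, max_px=48):
    """Linearly map a score onto a font size in pixels."""
    if max_score == min_score:
        return (min_px + max_px) // 2   # all items equally important
    fraction = (score - min_score) / (max_score - min_score)
    return round(min_px + fraction * (max_px - min_px))


def render_items(scored_items):
    """scored_items: list of (text, score) pairs; returns an HTML string."""
    if not scored_items:
        return ""
    scores = [score for _, score in scored_items]
    lo, hi = min(scores), max(scores)
    lines = []
    # Biggest (most important) item first.
    for text, score in sorted(scored_items, key=lambda pair: pair[1], reverse=True):
        size = font_size_px(score, lo, hi)
        lines.append(f'<p style="font-size:{size}px">{html.escape(text)}</p>')
    return "\n".join(lines)


print(render_items([("Weather: sunny", 2), ("Capital of Turkey: Ankara", 10)]))
```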

A screen-capture of Ramukaka

Like, Share and Follow for updates on Ramukaka.